AI Gamification CRM: From Rules to Reinforcement

Digital life moves fast, and customer loyalty moves even faster. When rewards feel stale or delayed, players, shoppers, and subscribers bolt to the next shiny offer. An AI-powered gamification CRM loop keeps every touchpoint fresh by reading real-time signals and adjusting missions, badges, and messages on the fly. This guide walks through the core ideas in plain language, so you can see how the parts fit together and why the shift from static rules to learning systems matters.

The Big Picture: Why Now?

Gamification has been around long enough to shed the gimmick label, yet most programs still rely on fixed rules that never learn. Meanwhile, event-stream platforms, cloud APIs, and cheaper compute have opened the door to real-time decisioning at scale. When you pair that data firehose with algorithms that learn from every click, cancel, or level-up, you get a living system that shapes itself to each user session. That living system is what we call an AI gamification CRM loop.

What We’ll Cover

- Event-stream architecture in plain English

- Where old-school rule engines shine, and where they stall

- Reinforcement learning and contextual bandits, minus the math

- Building a loop step by step

- Story-driven examples across industries

- People, process, and governance issues

- A short look at Smartico.ai – the platform built for this work

- An FAQ to clear up lingering doubts

Event Streams: The Heartbeat of Real-Time Engagement

Streams vs. Batches

A batch job is like the nightly news: everything you did today shows up tomorrow. An event stream is more like a live sports ticker: every action flashes across the bottom of the screen the moment it happens. For gamification, that difference is huge. If the system can see a deposit the instant it lands, it can reward the user before the thrill fades.

Key Ingredients

- Producers send events – think of the game client, checkout page, or mobile SDK.

- The bus (Kafka, Kinesis, or a cloud pub-sub) keeps events in order, typically within each partition or shard, and hands them off to any consumer that subscribes.

- Consumers process those events. Some are simple rule checks, others feed data to a reinforcement-learning agent.

Because producers and consumers never meet directly, you can swap parts without rewiring the whole machine.
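To make the producer, bus, and consumer split concrete, here is a minimal in-memory sketch. It is not Kafka or Kinesis. The `EventBus` class, the `deposits` topic, and the 50-unit badge threshold are hypothetical stand-ins that only show how the two sides stay decoupled.

```python
from collections import defaultdict
from typing import Callable

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Toy in-memory stand-in for a real bus such as Kafka or Kinesis (hypothetical)."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)  # topic -> consumer callbacks
        self._log = defaultdict(list)          # topic -> events in arrival order

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        self._log[topic].append(event)         # keep history for later replay
        for handler in self._subscribers[topic]:
            handler(event)                     # every interested consumer gets the event

    def history(self, topic: str) -> list:
        return list(self._log[topic])


badges = []        # output of a simple rule consumer
agent_inputs = []  # events forwarded to a hypothetical learning agent


def deposit_badge_rule(event: Event) -> None:
    """Rule consumer: hypothetical badge for deposits of 50 or more."""
    if event["amount"] >= 50:
        badges.append(event["user_id"])


bus = EventBus()
bus.subscribe("deposits", deposit_badge_rule)
bus.subscribe("deposits", agent_inputs.append)

# Producer side: the checkout page knows only the topic name, not who consumes it.
bus.publish("deposits", {"user_id": "u1", "amount": 75})
bus.publish("deposits", {"user_id": "u2", "amount": 20})
```

Swapping the badge rule for another consumer touches only a `subscribe` call, and the producer code stays the same. A real bus adds durability, partitioning, and delivery guarantees that this toy ignores.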

Why It Matters for Gamification

- Instant feedback builds habit.

- Fine-grained context – device, location, time – travels with each event, giving AI models rich clues for next-best actions.

- Replayable history lets data teams run experiments safely.


Rules Engines: The Old Guard

Rule builders in CRMs let you say, “If a user finishes five spins, show a bronze badge.” Easy to audit, easy to explain – great for compliance triggers or birthday gifts. But rules freeze the world in whatever shape you imagined during setup. When player tastes shift, you tweak thresholds, redeploy, and hope for the best. Over time, the logic tree turns into spaghetti.
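A rule like the bronze-badge example can be sketched as plain data plus a tiny evaluator. The `Rule` type, the rule names, and the user-profile fields are hypothetical. The point is that every threshold is fixed at setup time.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[dict], bool]
    action: str


# Hypothetical rule set: each threshold is hard-coded and never learns.
RULES = [
    Rule("bronze_after_5_spins", lambda u: u.get("spins", 0) >= 5, "show_bronze_badge"),
    Rule("birthday_gift", lambda u: u.get("is_birthday", False), "send_birthday_gift"),
]


def evaluate(user: dict) -> list[str]:
    """Return every action whose condition matches; easy to audit line by line."""
    return [rule.action for rule in RULES if rule.condition(user)]
```

Changing the `5` means editing and redeploying the rule list, which is exactly the maintenance loop described above.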

Where Rules Still Win

- Clear yes-no checks (“user must be 18+”).

- Low-volume ops tasks.

- One-off promotions with a known end date.

Where Rules Struggle

- Sequenced journeys that span dozens of steps.

- Rapidly shifting behaviors, such as viral game modes or flash-sale traffic.

- Personalization for thousands of segments.

The takeaway: rules set guardrails; learning systems handle the dynamic middle lane.

Reinforcement Learning: Letting the System Learn

Basic Idea

Imagine training a puppy. It tries a behavior, gets a treat or no treat, and adjusts. Reinforcement learning (RL) works the same way. An agent tries a CRM action – maybe a “spin the wheel” prompt or a deposit bonus. It then sees the result: did the user stay longer, cash out, or close the app? That outcome becomes the reward signal. Over many tries, the agent picks the actions that earn higher rewards more often.
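The try, observe, adjust cycle can be sketched with an epsilon-greedy agent. Strictly speaking this is a multi-armed bandit, the one-step special case of RL, and it is used here only because it is the smallest honest example. The action names and the simulated response rates are made up.

```python
import random
from typing import Optional


class EpsilonGreedyAgent:
    """Tracks an average reward per action and usually picks the best one so far."""

    def __init__(self, actions: list[str], epsilon: float = 0.1, seed: Optional[int] = None) -> None:
        self.actions = actions
        self.epsilon = epsilon                       # share of turns spent exploring
        self.counts = {a: 0 for a in actions}
        self.values = {a: 0.0 for a in actions}      # estimated average reward per action
        self.rng = random.Random(seed)

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)                # explore: try anything
        return max(self.actions, key=lambda a: self.values[a])  # exploit: best estimate

    def update(self, action: str, reward: float) -> None:
        self.counts[action] += 1
        # Incremental mean: new_avg = old_avg + (reward - old_avg) / n
        self.values[action] += (reward - self.values[action]) / self.counts[action]


# Simulated users: made-up chances that each prompt keeps someone playing.
TRUE_RATES = {"spin_the_wheel": 0.30, "deposit_bonus": 0.15}

agent = EpsilonGreedyAgent(list(TRUE_RATES), epsilon=0.1, seed=7)
env = random.Random(42)
for _ in range(5000):
    action = agent.choose()
    reward = 1.0 if env.random() < TRUE_RATES[action] else 0.0  # the "treat or no treat"
    agent.update(action, reward)
```

After a few thousand simulated sessions the agent's estimates settle near the hidden rates, and it spends most turns on the better prompt while still sampling the other one now and then.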

Contextual Bandits: The Lightweight Option

Full RL can feel like rocket science. Contextual bandits trim the complexity by focusing on single-step choices instead of long chains. You give the model context (user tier, device, local time) and a set of possible actions. It returns the one with the best expected reward while still exploring new options here and there. For many loyalty flows, that’s enough.
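One way to sketch a contextual bandit is Thompson sampling with a Beta distribution per (context, action) pair. The article does not name an algorithm, so treat this as one option among several. It also assumes context has already been bucketed into a small key such as (device, time of day); production systems often use linear or neural models instead. All names and rates below are hypothetical.

```python
import random
from collections import defaultdict
from collections.abc import Hashable
from typing import Optional


class ContextualThompsonBandit:
    """Beta-Bernoulli Thompson sampling with one posterior per (context, action) pair."""

    def __init__(self, actions: list[str], seed: Optional[int] = None) -> None:
        self.actions = actions
        # [alpha, beta]; Beta(1, 1) is a uniform prior for pairs never seen before
        self.params = defaultdict(lambda: [1.0, 1.0])
        self.rng = random.Random(seed)

    def choose(self, context: Hashable) -> str:
        # Draw a plausible success rate for each action and take the best draw.
        # Uncertain actions sometimes draw high, which is how exploration happens.
        draws = {a: self.rng.betavariate(*self.params[(context, a)]) for a in self.actions}
        return max(draws, key=draws.get)

    def update(self, context: Hashable, action: str, reward: int) -> None:
        alpha_beta = self.params[(context, action)]
        alpha_beta[0 if reward else 1] += 1  # success -> alpha, failure -> beta

    def posterior_mean(self, context: Hashable, action: str) -> float:
        alpha, beta = self.params[(context, action)]
        return alpha / (alpha + beta)


# Made-up response rates that differ by context bucket (device, time of day).
TRUE_RATES = {
    ("mobile", "night"): {"win_streak_mission": 0.35, "collection_badge": 0.10},
    ("desktop", "afternoon"): {"win_streak_mission": 0.10, "collection_badge": 0.30},
}

bandit = ContextualThompsonBandit(["win_streak_mission", "collection_badge"], seed=1)
env = random.Random(2)
for _ in range(3000):
    ctx = env.choice(list(TRUE_RATES))
    action = bandit.choose(ctx)
    reward = 1 if env.random() < TRUE_RATES[ctx][action] else 0
    bandit.update(ctx, action, reward)
```

Each decision is a single step: context in, one action out, one reward back. Nothing in the model looks further ahead, which is what keeps bandits lighter than full RL.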

Why RL Fits Gamification

- Sequential nature: Points today change behavior tomorrow. RL optimizes the long game.

- Personalization at scale: The model treats each session as unique context instead of lumping users into broad buckets.

- Automatic tuning: As offers wear out, the system spots the drop and pivots without a manual rule rewrite.
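The "long game" point can be written with the standard discounted return from the RL literature. The agent values an action by the rewards that follow it, now and later, with a discount factor γ that controls how much future rewards count.

```latex
G_t = r_{t+1} + \gamma\, r_{t+2} + \gamma^2 r_{t+3} + \cdots
    = \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1}, \qquad 0 \le \gamma < 1
```

Setting γ = 0 leaves only the immediate reward r_{t+1}, which is effectively what a contextual bandit optimizes. Values closer to 1 let points earned today count toward behavior weeks later.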

Building Your First AI Gamification Loop

1. Set One Clear Goal

Pick something observable: “Increase weekly return visits.” Avoid fuzzy wishes like “make users happy.”

2. Identify the Reward Signal

Tie the goal to a simple, binary metric: did the user log in again within seven days? That way the agent can measure success without human judgment.
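A minimal sketch of that reward, assuming login events are available as timestamps per user. The function name and parameters are hypothetical.

```python
from datetime import datetime, timedelta


def return_visit_reward(offer_time: datetime, login_times: list[datetime],
                        window: timedelta = timedelta(days=7)) -> int:
    """1 if the user logs in again within `window` after the offer, else 0."""
    return int(any(offer_time < t <= offer_time + window for t in login_times))
```

Note that this reward arrives up to seven days late, so the agent can only learn from an offer once its window has closed.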

3. Wire Event Streams

Track the actions that matter: logins, deposits, mission completions. Use the same topic names in dev and prod to keep life simple.

4. Craft a Safe Action Set

Start small: two mission offers, one badge, perhaps a nudge message. Let the agent learn before you hand it the full catalog.
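One way to keep the action set small and approved is an explicit allowlist that the agent's output is checked against. The enum members mirror the "two missions, one badge, one nudge" example, but their names are hypothetical.

```python
from enum import Enum


class PilotAction(str, Enum):
    """Hypothetical starter set: two missions, one badge, one nudge."""
    MISSION_THREE_LOGINS = "mission_three_logins"
    MISSION_FIRST_DEPOSIT = "mission_first_deposit"
    BADGE_EXPLORER = "badge_explorer"
    NUDGE_MESSAGE = "nudge_message"


APPROVED = frozenset(a.value for a in PilotAction)


def validate_choice(choice: str) -> PilotAction:
    """Refuse anything the team has not approved instead of showing it to a user."""
    if choice not in APPROVED:
        raise ValueError(f"action {choice!r} is not in the approved pilot set")
    return PilotAction(choice)
```

Growing the catalog later means adding enum members, which leaves a reviewable trail in version control.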

5. Launch a Soft Pilot

Route a small slice of traffic to the new system. Compare engagement to the rule-based control. Because you’re using streams, you can roll back instantly if something looks off.

6. Expand Gradually

Add new actions and richer context as confidence grows. Bandits can handle more arms; deep RL can step in when sequences get long.

7. Monitor and Learn

Look beyond vanity metrics. Did deposit frequency climb? Did support tickets spike? Keep a human in the loop even when the algorithm seems flawless.
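A sketch of comparing the pilot against the control on both the target metric and a guardrail metric such as support tickets. The field names, the 10% tolerance, and the numbers in the example are made up, and a real analysis would also check statistical significance.

```python
from dataclasses import dataclass


@dataclass
class GroupStats:
    users: int
    returned_within_7d: int
    support_tickets: int


def pilot_report(pilot: GroupStats, control: GroupStats, ticket_tolerance: float = 0.10) -> dict:
    """Relative lift in return rate, plus a flag if tickets per user rise past the tolerance."""
    pilot_rate = pilot.returned_within_7d / pilot.users
    control_rate = control.returned_within_7d / control.users
    pilot_tickets = pilot.support_tickets / pilot.users
    control_tickets = control.support_tickets / control.users
    return {
        "return_rate_lift": (pilot_rate - control_rate) / control_rate,
        "ticket_guardrail_breached": pilot_tickets > control_tickets * (1 + ticket_tolerance),
    }


# Made-up example: 33% vs 30% return rate, but 20% more tickets per user.
report = pilot_report(GroupStats(1000, 330, 12), GroupStats(1000, 300, 10))
```

In the example the pilot shows a 10% relative lift, yet the guardrail still fires, which is the kind of case where a human should look before expanding.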

Story-Driven Walkthroughs

Scenario A: iGaming Retention

A slots app sees many first-time depositors churn after one day. The team tracks every spin and wager as events. A contextual bandit learns that night-owl players on mobile respond best to quick win-streak missions, whereas afternoon desktop players prefer collection badges. After two weeks, night-owl retention improves enough that the team extends RL to all cohorts. No one hard-coded “night owl” as a rule; the pattern emerged from the feedback loop itself.

Scenario B: E-Commerce Loyalty

An online sneaker store wants to boost repeat purchases. Instead of blanket discounts, it feeds browsing history, cart drops, and email clicks into a bandit. The model tries free shipping, early access, or loyalty points. Over time, it learns that size-11 buyers respond to early-access drops, while size-7 buyers lean toward points. The store sees more full-price sales, and developers never adjust a single if-statement.

Scenario C: SaaS Onboarding

A B2B analytics tool struggles with trial users who poke around once and disappear. By tracking in-app events such as dashboard views and report exports, it trains a bandit to surface next-step tooltips. Users who export a report receive a “share with a teammate” mission; users who linger on chart settings get a badge for building a custom view. Trial-to-paid conversion ticks up without adding headcount to customer success.

People, Process, and Governance

Roles You’ll Need

- Product Owner: Defines the goals and guards brand tone.

- Data Engineer: Keeps streams flowing, schemas clean.

- ML Engineer or Analyst: Tunes models, reads the reward curves.

- Compliance Lead: Ensures offers remain within legal boundaries, especially for sectors like online casino gaming.

Data Hygiene Principles

- Single Source of Truth: Store raw events once; derive views downstream.

- Immutable Logs: Never rewrite events; append new facts.

- Versioned Schemas: Add fields, don’t mutate them – old consumers keep working.
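A minimal sketch of the three principles together: raw events are appended once and never edited, views are derived by replaying the log from any offset, and a reader tolerates older events because a later schema version only added a field. `EVENT_LOG`, the field names, and the optional `device` field are hypothetical.

```python
from typing import Any

EVENT_LOG: list[dict[str, Any]] = []  # single source of truth; append-only


def append_event(event: dict[str, Any]) -> int:
    """Store a copy of the event and return its offset; existing entries are never modified."""
    EVENT_LOG.append(dict(event))
    return len(EVENT_LOG) - 1


def read_login(event: dict[str, Any]) -> dict[str, Any]:
    """Normalize old and new login events; the newer version only added `device`."""
    return {
        "user_id": event["user_id"],
        "ts": event["ts"],
        "device": event.get("device", "unknown"),  # older events lack it; default keeps them usable
    }


def logins_per_device(start_offset: int = 0) -> dict[str, int]:
    """Derived view, rebuilt from scratch by replaying the log from any offset."""
    counts: dict[str, int] = {}
    for event in EVENT_LOG[start_offset:]:
        if event["type"] == "login":
            device = read_login(event)["device"]
            counts[device] = counts.get(device, 0) + 1
    return counts
```

Because the log is never rewritten, a data team can rebuild a view or rerun an experiment from the same history at any time.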

Bias and Fairness Checks

An RL system optimizes reward, not ethics. Schedule audits: slice performance by age, region, and device. If one group sees more aggressive offers, dig into why before regulators do.
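A sketch of the slicing audit: count how often each segment received an offer tagged as aggressive, and flag segments well above the overall rate. The `aggressive` tag, the `region` field, and the 1.5× threshold are hypothetical; deciding what counts as aggressive is a policy call, not a coding one.

```python
from collections import defaultdict


def aggressive_offer_audit(decisions: list[dict], slice_key: str,
                           ratio_threshold: float = 1.5) -> dict[str, float]:
    """Return segments whose aggressive-offer rate exceeds ratio_threshold x the overall rate."""
    shown = defaultdict(int)
    aggressive = defaultdict(int)
    for d in decisions:
        shown[d[slice_key]] += 1
        aggressive[d[slice_key]] += int(d["aggressive"])
    overall = sum(aggressive.values()) / sum(shown.values())
    flagged = {}
    for segment, n in shown.items():
        rate = aggressive[segment] / n
        if overall > 0 and rate > ratio_threshold * overall:
            flagged[segment] = rate
    return flagged
```

The same function can be rerun with `slice_key="age_band"` or `"device"` to cover the other slices mentioned above.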

Common Roadblocks and Quick Fixes

Even a robust RL loop needs must-follow guardrails that the agent cannot override. Think of them as seat belts.
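A sketch of guardrails wrapped around whatever the learning agent proposes. Hard rules run first, opted-out users get a rule-based fallback, and the policy only chooses among what is left. The user fields, `BONUS_ACTIONS`, and `GENERIC_OFFER` are hypothetical.

```python
from typing import Callable, Optional

Policy = Callable[[dict, list], str]

BONUS_ACTIONS = {"deposit_bonus"}         # hypothetical: actions gated by bonus eligibility
GENERIC_OFFER = "generic_weekly_mission"  # hypothetical non-personalized fallback


def allowed_actions(user: dict, actions: list[str]) -> list[str]:
    """Hard rules the learning agent can never override."""
    if user.get("age", 0) < 18 or user.get("self_excluded", False):
        return []                                          # no offers at all
    if not user.get("bonus_eligible", True):
        return [a for a in actions if a not in BONUS_ACTIONS]
    return list(actions)


def decide(user: dict, actions: list[str], policy: Policy) -> Optional[str]:
    """Rules set the boundaries; the policy (for example a bandit) picks inside them."""
    candidates = allowed_actions(user, actions)
    if not candidates:
        return None
    if user.get("personalization_opt_out", False):
        return GENERIC_OFFER                               # rule-based fallback, no model call
    return policy(user, candidates)
```

A missing age defaults to blocked, so incomplete profiles fail safe rather than open.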

The Ethical Angle

Real-time personalization is powerful, so use it wisely:

- Transparency: Let users know missions adapt to their behavior.

- Opt-out paths: Don’t trap anyone in perpetual quests.

- Responsible play: In iGaming, let responsible-play settings feed the model so it reduces offers when risk rises.
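One way to let responsible-play signals reduce offers is to shrink a daily offer budget as a risk score rises and to stop offers entirely past a cutoff. The risk score on a 0 to 1 scale, the base budget of three, and the 0.8 cutoff are all hypothetical; real values would come from your responsible-gambling policy.

```python
import math


def daily_offer_budget(risk_score: float, base_budget: int = 3, cutoff: float = 0.8) -> int:
    """Fewer offers as risk rises; none at or above the cutoff (risk_score in [0, 1])."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= cutoff:
        return 0
    return math.floor(base_budget * (1 - risk_score / cutoff))


def may_send_offer(risk_score: float, offers_sent_today: int) -> bool:
    return offers_sent_today < daily_offer_budget(risk_score)
```

This acts as a hard ceiling; the same risk score can also be passed to the model as context so it learns to favor gentler actions.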

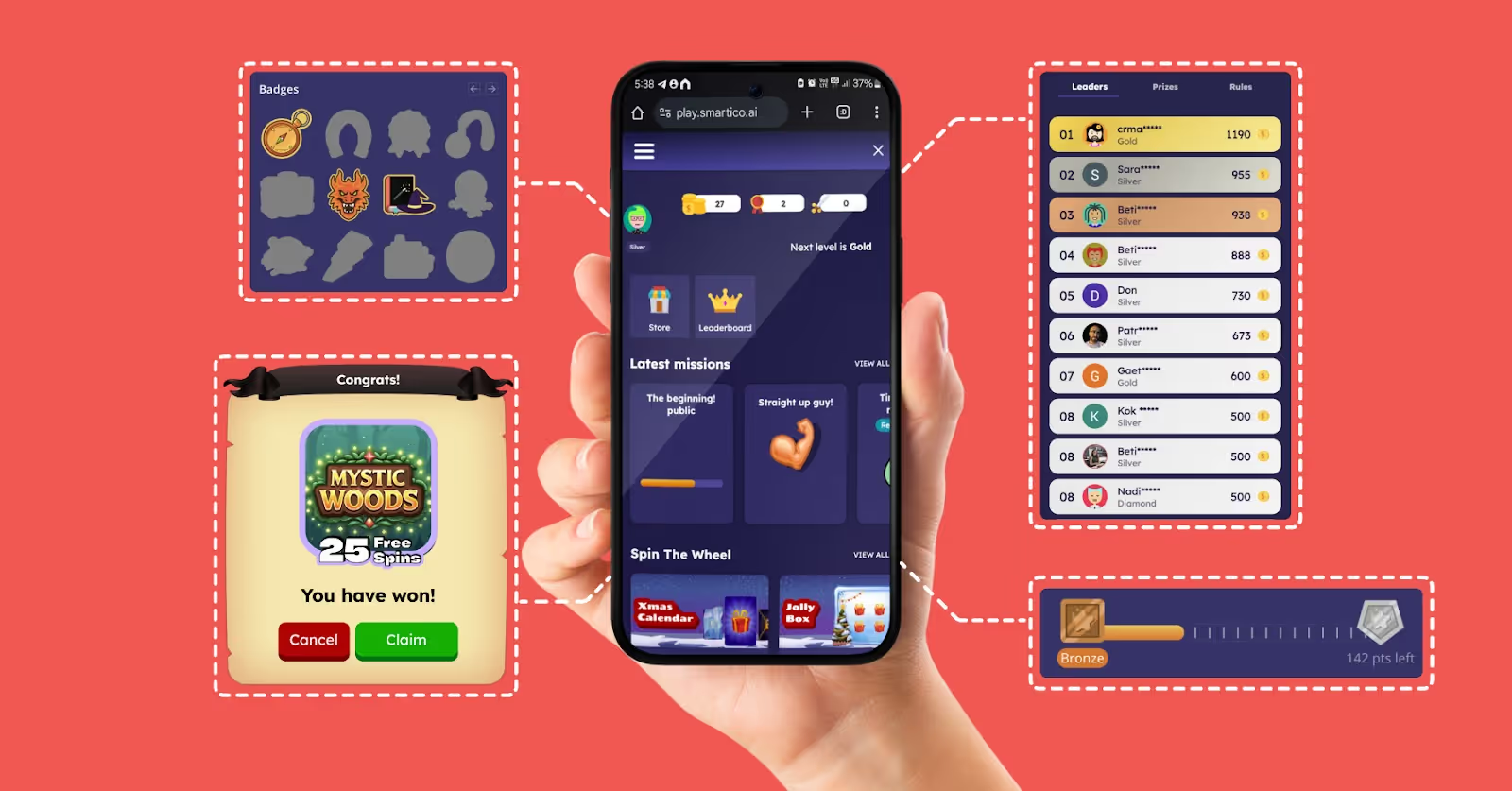

Smartico.ai: One Platform, All the Pieces

Smartico.ai combines the plumbing – event streams, rule engine, reinforcement learning workbench – into a single interface.

Highlights:

- Real-time orchestration across campaigns, bonuses, and mini-games.

- Built-in AI models that segment users by churn risk and suggest missions automatically.

- Gamification with iGaming roots, yet flexible enough for fintech or e-commerce.

Curious? A Request Demo button sits right below. One click shows how the theory plays out live.

{{cta-banner}}

Frequently Asked Questions

1. Do I need a large data-science team to start?

No. Small teams can begin with contextual bandits via open-source libraries and grow into deeper RL later.

2. How soon will we see results?

Early pilots often surface trends within a month, but timelines vary by traffic volume and reward clarity.

3. Can the algorithm harm brand tone?

The model picks from your approved actions and content. Keep creative reviews in place, and tone stays intact.

4. What if a user opts out of personalization?

Fallback rules can serve generic offers. Respecting opt-out is a legal and ethical must-do.

5. Is this approach safe for regulated markets?

Yes – provided you log every decision, keep consent flags in the event stream, and let compliance teams replay history during audits.
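A sketch of an audit-friendly decision log. Every choice is written as one JSON line with the context, the candidate actions, the chosen action, and the consent flag, so compliance can replay history later. The field names and the `model_version` value are hypothetical.

```python
import io
import json
from datetime import datetime, timezone
from typing import IO, Iterable, Iterator


def log_decision(stream: IO[str], user_id: str, context: dict, candidates: list[str],
                 chosen: str, consent_personalization: bool, model_version: str) -> None:
    """Append one decision as a JSON line; earlier lines are never rewritten."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "context": context,
        "candidates": candidates,
        "chosen": chosen,
        "consent_personalization": consent_personalization,
        "model_version": model_version,
    }
    stream.write(json.dumps(record, sort_keys=True) + "\n")


def replay(lines: Iterable[str]) -> Iterator[dict]:
    """Yield logged decisions back, oldest first, for an audit."""
    for line in lines:
        if line.strip():
            yield json.loads(line)


buffer = io.StringIO()  # stands in for an append-only file or topic
log_decision(buffer, "u1", {"device": "mobile"}, ["a", "b"], "a", True, "bandit-v3")
```

Logging the candidate list alongside the choice lets auditors see not just what was shown but what the guardrails allowed at that moment.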

Closing Thoughts

AI gamification CRM loops replace paint-by-numbers loyalty with living systems that learn. You don’t need esoteric math or endless dashboards to get started – only clear goals, clean events, and a small action set. From there, the loop does the heavy lifting, nudging each user toward deeper engagement.

