The 7 Golden Rules of A/B Testing in iGaming

You know that feeling when you change something on your casino site and you're not quite sure if it's actually working better? Maybe you tweaked the welcome bonus messaging, or you changed the color of your deposit button, or you restructured your loyalty program emails. And now you're just... hoping it worked?

That's where A/B testing comes in. And in iGaming, where player lifetime value can swing wildly based on tiny friction points, getting this right isn't optional anymore.

A/B testing in iGaming is about making decisions based on data instead of gut feelings. It's the difference between knowing your new VIP tier structure converts 23% better and just thinking it "feels right." But here's the thing: most online casino operations are doing it wrong. They're testing random stuff without strategy, looking at vanity metrics, or – worst of all – not testing at all.

Let's fix that. Here are the seven golden rules that'll help you run A/B tests that actually move the needle.

Rule 1: Test One Variable at a Time (Or You'll Learn Nothing)

This is A/B testing 101, but you'd be surprised how many iGaming operators break this rule.

Imagine you decide to test a new welcome email. You change the subject line, rewrite the body copy, swap out the CTA button color, and adjust the send timing. The test shows a 15% lift in click-through rates. Great, right?

Wrong. You have no idea what caused that lift.

Was it the snappier subject line? The red button instead of blue? The fact that you sent it at 7 PM instead of noon? You just ran four tests at once and learned nothing useful that you can apply elsewhere.

The fix is simple: isolate variables. Test the subject line first. Once you have a winner, test the CTA color. Then test the timing. Yeah, it takes longer. But you'll actually know what works and why.

This applies to everything in your CRM automation. If you're testing a new gamification feature, don't also change the reward structure and the UI at the same time. One variable per test. Always.

Rule 2: Statistical Significance Isn't Optional

Let's say you run a test on two different bonus offers. Option A gets 47 conversions. Option B gets 52 conversions. You declare Option B the winner and roll it out to everyone.

The problem is that with such a small sample, that difference might just be random noise. If you'd run the test longer, Option A might have caught up or even won.

Statistical significance tells you whether your results are real or just coincidence. In iGaming, where player behavior can be volatile (someone hits a big win and suddenly they're depositing way more than usual), you need enough data to smooth out those random spikes.

{{cta-banner}}

Most A/B testing tools will calculate significance for you, but as a general rule, you want at least 95% confidence before calling a winner. And you need a decent sample size – typically at least a few hundred conversions per variant.
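To see why 47 versus 52 isn't a win yet, here's a minimal sketch of the kind of check a testing tool runs behind the scenes: a two-proportion z-test. The conversion counts come from the bonus-offer example above. The 1,000 players per variant is an assumption made purely for illustration, since the example doesn't say how many players saw each offer, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both offers convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# 47 and 52 conversions are from the example; 1,000 players per variant is assumed.
z, p_value = two_proportion_z_test(47, 1000, 52, 1000)
print(f"z = {z:.2f}, p = {p_value:.2f}")
print("significant at 95%" if p_value < 0.05 else "not significant at 95%")
```

Under that assumed traffic, the p-value lands far above 0.05, so the five-conversion gap is well within what chance alone would produce.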

According to a Harvard Business Review article on online experimentation, a single Microsoft Bing experiment that changed how ad headlines were displayed resulted in a 12% revenue increase worth over $100 million annually. The article argues that leading companies running thousands of rigorous tests see dramatically better outcomes than those relying on intuition alone.

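Don't rush it. Decide up front how much data you need, and let the test run until it gets there, even if it takes weeks. Checking early and stopping the moment one variant pulls ahead is how random noise gets declared a winner. A wrong conclusion is worse than a slow one.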

Rule 3: Know What You're Actually Measuring

Click-through rate. Conversion rate. Engagement rate. Revenue per user. Retention rate. Churn rate.

There are dozens of metrics you could track in an A/B test. But here's the question: which ones actually matter for your business goals?

Too many operators get excited about improving a metric that doesn't really move their bottom line. Sure, your new email template gets 30% more opens. But if those extra opens don't translate into deposits, does it matter?

You need to identify your North Star metric – the one number that truly indicates success. For most iGaming operations, that's probably something like player lifetime value or net revenue. Everything else is just a means to that end.

Here's how to think about it:

Primary metrics (what you're trying to improve):

- Deposit conversion rate

- Average deposit amount

- Player lifetime value

- Long-term retention (30-day, 90-day)

- Net revenue per player

Secondary metrics (supporting indicators):

- Email open rates

- Click-through rates

- Session frequency

- Time on site

- Bonus redemption rates

Guardrail metrics (making sure you're not breaking something):

- Support ticket volume

- Complaint rates

- Unsubscribe rates

- Account closure rates

Before you start any test, write down which metric you're trying to improve and why it matters to your business. If you can't articulate that, you're not ready to test yet.
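One way to make the primary/guardrail split concrete is to write the decision rule down before the test starts. The sketch below is a hedged illustration, not a standard: the `MetricResult` class, the `should_ship` function, the 2% minimum lift, the 5% guardrail tolerance, and all the numbers are assumptions. It also skips the significance check from Rule 2 to keep the focus on the metric hierarchy.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    name: str
    control: float
    variant: float
    higher_is_better: bool = True

    def improvement(self) -> float:
        """Relative change, signed so that positive always means 'better'."""
        change = (self.variant - self.control) / self.control
        return change if self.higher_is_better else -change


def should_ship(primary, guardrails, min_lift=0.02, max_degradation=0.05):
    """Ship only if the primary metric improves enough and no guardrail degrades too far."""
    if primary.improvement() < min_lift:
        return False
    return all(g.improvement() >= -max_degradation for g in guardrails)


# Illustrative numbers only.
primary = MetricResult("deposit_conversion_rate", control=0.080, variant=0.086)
guardrails = [
    MetricResult("unsubscribe_rate", control=0.010, variant=0.013, higher_is_better=False),
    MetricResult("support_tickets_per_1k_players", control=12.0, variant=12.2, higher_is_better=False),
]

print(f"primary lift: {primary.improvement():+.1%}")
print("ship" if should_ship(primary, guardrails) else "hold: check guardrails")
```

In this made-up case the deposit conversion rate improves, but the unsubscribe rate gets 30% worse, so the rule says hold. That's the point of a guardrail: it stops a "winner" that is quietly breaking something else.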

Rule 4: Segment Your Players (Because Not Everyone's the Same)

Here's a reality check: your high-roller who deposits $5,000 a month and your casual player who throws in $20 on Friday nights are not the same person. They don't respond to the same messages, they don't care about the same rewards, and they definitely shouldn't be in the same A/B test.

When you run a test across your entire player base, you're getting averaged results that might hide what's really happening. Maybe your new loyalty program crushes it with mid-tier players but completely flops with VIPs. If you're only looking at the aggregate numbers, you might see a modest 5% improvement and call it a win – missing the fact that you just alienated your most valuable segment.

Smart A/B testing in iGaming means testing within segments:

- New players vs. returning players

- Low-value vs. high-value players

- Different game preferences (slots vs. table games vs. sports betting)

- Geographic regions (if you operate in multiple markets)

- Engagement levels (active vs. at-risk vs. dormant)

You might find that personalization based on these segments delivers way better results than any single "winning" variant could. And that's actually the whole point of modern CRM for iGaming – treating different players differently based on what actually motivates them.
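Here's a small sketch of how an aggregate number can hide a segment-level loss. Every figure is invented for illustration, and the segment names and helper functions are assumptions; the only thing taken from the scenario above is the shape of the problem (a modest overall lift, mid-tier players up, VIPs down).

```python
# Illustrative numbers only: (players, conversions) per test arm.
results = {
    "mid_tier": {"control": (4000, 320), "variant": (4000, 352)},
    "vip": {"control": (200, 60), "variant": (200, 48)},
}


def rate(players, conversions):
    return conversions / players


def relative_lift(control, variant):
    """Relative change in conversion rate from control to variant."""
    return rate(*variant) / rate(*control) - 1


def aggregate(arm):
    """Sum players and conversions for one arm across all segments."""
    players = sum(segment[arm][0] for segment in results.values())
    conversions = sum(segment[arm][1] for segment in results.values())
    return players, conversions


overall = relative_lift(aggregate("control"), aggregate("variant"))
print(f"overall: {overall:+.1%}")
for name, segment in results.items():
    print(f"{name}: {relative_lift(segment['control'], segment['variant']):+.1%}")
```

With these made-up numbers the overall lift comes out at roughly 5%, while the VIP segment drops by about 20%. Small segments like VIPs also need their own significance check, since a few hundred players produce noisy rates.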

Rule 5: Give Your Tests Enough Time to Breathe

Monday's iGaming traffic looks different from Friday's. The first week of the month behaves differently than the last week (hello, payday patterns). And player behavior right after a big sports event is nothing like a random Tuesday.

If you run your A/B test for three days and call it done, you're probably just measuring noise.

Your tests need to run long enough to account for these patterns. Run every test for at least one full week to capture weekly cycles. Better yet, run for two weeks or even a full month if you're testing something with longer-term implications like loyalty program changes.
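A simple way to plan for this is to round the test length up to whole weeks, so every weekday is represented equally. The sketch below assumes you already know how many players each variant needs and how many eligible players arrive per day; the function name and the numbers are hypothetical.

```python
from math import ceil


def planned_duration_days(required_per_variant, variants, daily_eligible_players, min_weeks=1):
    """Days to run a test, rounded up to full weeks so weekly cycles are covered."""
    total_needed = required_per_variant * variants
    raw_days = ceil(total_needed / daily_eligible_players)
    weeks = max(min_weeks, ceil(raw_days / 7))
    return weeks * 7


# Assumed inputs: 5,000 players per variant, 2 variants, 1,200 eligible players a day.
print(planned_duration_days(5000, 2, 1200))  # 9 raw days, rounded up to 14
```

Pass a larger `min_weeks` for changes with longer-term implications, such as loyalty program tests.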

This is especially important for CRM automation tests. If you're testing a re-engagement email series that plays out over 14 days, you can't evaluate it after 3 days. You need to see the full journey through.

There's also the novelty effect to consider. Sometimes players respond positively to something new just because it's different, not because it's better. That initial spike might fade after a few days once the novelty wears off. Running tests longer helps you identify whether you've got a sustainable improvement or just a temporary bump.

Rule 6: Don't Ignore the Losers (They're Just as Valuable)

Let's talk about something that doesn't get enough attention: failed tests.

You tested a new welcome bonus structure. It lost by 8%. You tested a different email subject line approach. It lost by 12%. You tested a gamification feature. It barely moved the needle.

Most teams just shrug, archive those results, and move on to the next test. That's a huge mistake.

Your losing tests are telling you something important about your players. They're showing you what doesn't work, which is just as valuable as knowing what does. More importantly, they're helping you build a mental model of what actually motivates your audience.

When a test fails, dig into the why:

- Did it fail across all segments or just specific ones?

- Was there a particular element that players rejected?

- Did it conflict with something else in the experience?

- What assumptions did you make that turned out to be wrong?

Document your losing tests just as thoroughly as your winners. Over time, you'll start to see patterns. Maybe your players consistently reject gamification elements that feel too "game-like" but respond well to progress tracking. Maybe they love personalized bonuses but hate generic promotional emails.

This knowledge compounds. Each test – win or lose – makes your next test smarter.

Rule 7: Have a Hypothesis Before You Hit "Start"

Random testing is just expensive guessing.

Before you launch any A/B test, you should be able to fill in this sentence: "I believe that [change] will result in [outcome] because [reason based on data or player behavior]."

For example:

- "I believe that adding a progress bar to our loyalty program emails will increase click-through rates by 15% because players respond well to visual indicators of achievement based on our previous gamification tests."

- "I believe that sending re-engagement emails at 6 PM instead of 10 AM will improve open rates by 20% because our analytics show peak player activity happens in the evening."

Notice what these hypotheses have in common? They're specific, measurable, and based on existing knowledge about player behavior.

A good hypothesis does three things:

- It focuses your test: you know exactly what you're trying to prove or disprove

- It sets success criteria: you know what "better" looks like before you start

- It builds institutional knowledge: even if you're wrong, you learn something about your assumptions

Without a hypothesis, you're just throwing spaghetti at the wall. With one, you're systematically learning what makes your players tick.
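If your team logs tests in code or a spreadsheet export, the fill-in-the-blank sentence maps neatly onto a small record. This is one possible sketch, with a hypothetical `Hypothesis` class and field names; the example values are taken from the progress-bar hypothesis above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    change: str
    metric: str
    expected_lift: float  # relative, e.g. 0.15 for +15%
    reason: str

    def statement(self) -> str:
        return (
            f"I believe that {self.change} will increase {self.metric} "
            f"by {self.expected_lift:.0%} because {self.reason}."
        )

    def is_ready(self) -> bool:
        """A hypothesis is ready only when every blank is filled in."""
        fields_filled = all([self.change.strip(), self.metric.strip(), self.reason.strip()])
        return fields_filled and self.expected_lift > 0


progress_bar = Hypothesis(
    change="adding a progress bar to our loyalty program emails",
    metric="click-through rates",
    expected_lift=0.15,
    reason="players respond well to visual indicators of achievement",
)
print(progress_bar.statement())
print(progress_bar.is_ready())
```

Refusing to launch anything where `is_ready()` is false is a cheap way to enforce the rule.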

How Smartico.ai Powers Smarter A/B Testing

So you understand the rules. Now here's the reality: following them manually is a nightmare.

That's where a unified Gamification CRM like Smartico.ai makes the difference. Founded in 2019, Smartico.ai became the first and leading solution to combine real-time gamification mechanics with CRM Automation specifically built for iGaming operations.

The software handles the heavy lifting of A/B testing across your entire player journey. You can test different gamification mechanics, loyalty program structures, personalized bonus offers, and communication strategies – all from one central system. The real-time data engine tracks player behavior across every touchpoint, so you're not guessing about what works.

What makes this particularly powerful for A/B testing is the personalization engine. You can run segmented tests automatically, comparing how different player groups respond to different approaches. High-rollers get one test variant, casual players get another, and the system tracks which combinations drive the best lifetime value.

The CRM automation tools also let you test complex, multi-touch campaigns without manually managing dozens of email variants and triggers. You can test an entire re-engagement sequence against a control group, measure the results at each stage, and optimize based on what actually converts players – not just what gets opens.

And because it's purpose-built for iGaming, you're not trying to force a generic marketing automation tool to understand the nuances of player retention, responsible gaming considerations, and regulatory requirements. It just works the way iGaming operations need it to work.

Want to find out how Smartico can help drive retention and loyalty for your business in ways you haven't tried before? Book your free, in-depth demo below.

{{cta-banner}}

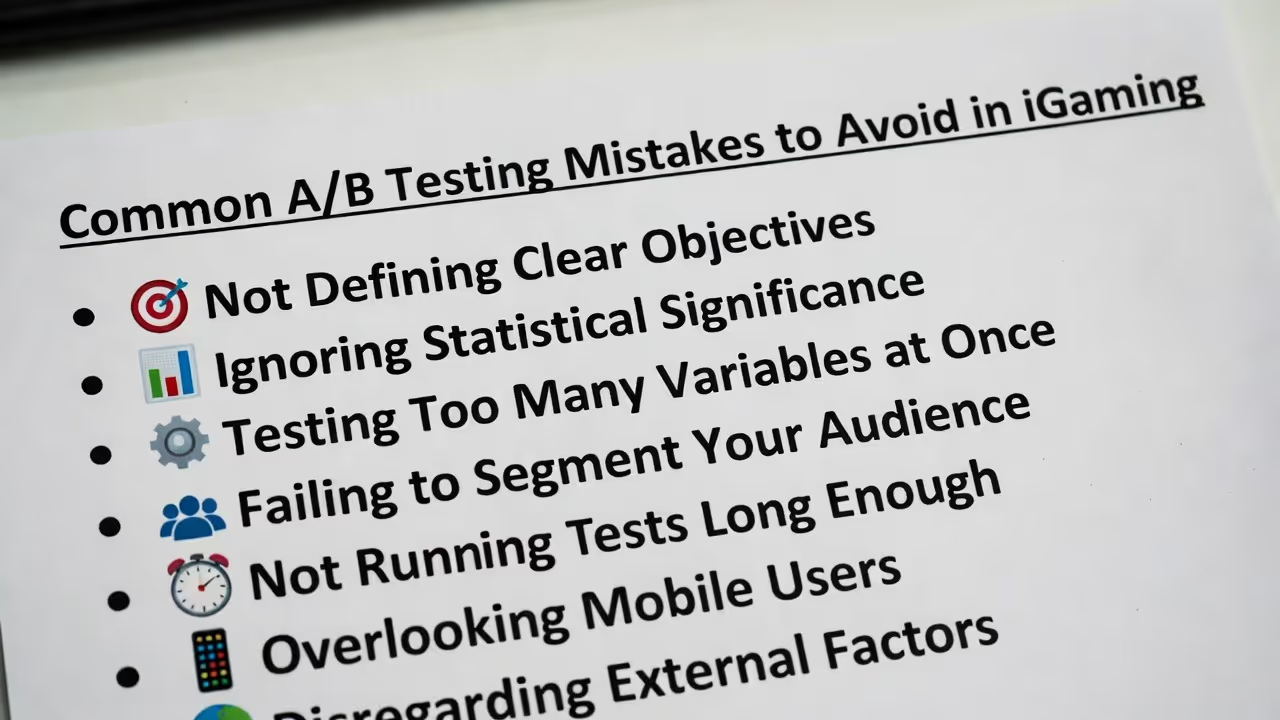

Common A/B Testing Mistakes to Avoid in iGaming

Even when you know the rules, it's easy to stumble. Here are the most common mistakes we see:

1. Testing too many things at once

You're eager to optimize everything. We get it. But running 15 simultaneous tests dilutes your traffic and makes it harder to reach statistical significance. Focus on your highest-impact opportunities first.

2. Stopping tests too early

You see promising results after two days and pull the trigger. Then the effect disappears when you roll it out to everyone. Patience pays off in A/B testing.

3. Ignoring mobile vs. desktop differences

Your desktop experience might test beautifully, but if 70% of your players are on mobile and you didn't segment that traffic, you're missing the full picture.

4. Not accounting for seasonality

Running a test during a major sporting event or holiday season can skew your results. Player behavior during these periods isn't representative of normal days.

5. Testing cosmetic changes while ignoring fundamental friction

Tweaking button colors is easy. Fixing a clunky registration flow is hard. Don't use A/B testing as an excuse to avoid solving real UX problems.

6. Forgetting about the player experience

Just because Test Variant B converted 5% better doesn't mean it's good for long-term player satisfaction. Always consider the bigger picture beyond immediate conversion metrics.

Building Your A/B Testing Roadmap

Now that you've got the rules down, you need a system. Here's how to build an A/B testing program that actually produces results:

Start with your biggest pain points. Where are players dropping off? Where is engagement lowest? What's your biggest retention challenge? Those are your testing priorities.

Create a backlog of hypotheses. Don't just test random ideas. Build a prioritized list based on potential impact and ease of implementation. Test high-impact, easy wins first to build momentum.

Establish a testing calendar. Schedule your tests so they don't overlap in ways that contaminate results. Leave enough time between tests to implement winners and measure their sustained impact.

Build a knowledge base. Document every test – hypothesis, methodology, results, insights, and decisions. Make this accessible to your whole team so everyone can learn from past tests.

Review and iterate regularly. Set aside time monthly or quarterly to analyze patterns across all your tests. What's working? What's not? How can you refine your approach?
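For the backlog step, even a crude scoring rule beats arguing about priorities in a meeting. The sketch below multiplies a 1-5 impact score by a 1-5 ease score; the scale, the scoring rule, and the ideas listed are all assumptions for illustration, and many teams use richer schemes.

```python
# Hypothetical backlog: impact and ease are scored 1 (low) to 5 (high).
backlog = [
    {"idea": "Shorter registration form", "impact": 5, "ease": 2},
    {"idea": "Evening re-engagement send time", "impact": 3, "ease": 5},
    {"idea": "Deposit button color", "impact": 1, "ease": 5},
]


def priority(item):
    """Higher score means test sooner: big impact that is easy to ship."""
    return item["impact"] * item["ease"]


ranked = sorted(backlog, key=priority, reverse=True)
for item in ranked:
    print(f"{priority(item):>2}  {item['idea']}")
```

Here the send-time test ranks first because it is both meaningful and cheap, while the button color lands last despite being easy.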

The operators who win at A/B testing aren't the ones running the most tests. They're the ones running the smartest tests and actually learning from them.

FAQ

How long should I run an A/B test in iGaming?

At minimum, run tests for one full week to account for day-of-week behavior patterns. For more significant changes like loyalty program restructures or major CRM automation flows, aim for 2-4 weeks. Always wait until you hit statistical significance (95% confidence) before declaring a winner, even if it takes longer than planned.

What's the minimum sample size needed for reliable A/B test results?

You generally need at least a few hundred conversions per variant to start seeing reliable patterns, though more is better. The exact number depends on your baseline conversion rate and the size of the effect you're trying to detect: the smaller the lift you want to catch, the more players you need. Most A/B testing tools include sample size calculators to help you determine this upfront.
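If you want a feel for what those calculators do, here's a minimal sketch using the standard normal-approximation formula for comparing two conversion rates. The function name, the 5% baseline, the 10% relative lift, and the 80% power default are assumptions chosen for illustration; your tool may use a slightly different method.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Players needed per variant to detect a relative lift with a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Assumed scenario: 5% baseline deposit conversion, hoping to detect a 10% relative lift.
n = sample_size_per_variant(0.05, 0.10)
print(f"{n:,} players per variant")
```

Under these assumptions the answer is on the order of 30,000 players per variant, which is why small lifts on low baseline rates take so long to confirm. Doubling the lift you're looking for cuts the requirement sharply.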

Can I run multiple A/B tests at the same time?

Yes, but be strategic about it. Make sure your tests aren't interfering with each other (for example, don't test email subject lines and send times simultaneously). Also ensure you have enough traffic to properly power each test. Running too many concurrent tests can dilute your traffic and extend the time needed to reach significance.

Should I test on all players or just specific segments?

Both approaches work depending on your goals. If you're testing something universal like a site-wide navigation change, test across all players. For targeted initiatives like VIP program updates or specific game promotions, test within the relevant segment. Segmented testing often reveals more actionable insights since different player groups behave differently.

What if my A/B test shows no significant difference between variants?

This is actually valuable information. It suggests that the change doesn't matter much to players, or that any effect is too small for your test to detect, so you can move on to testing something else. It might also suggest that your hypothesis was wrong or that you need a more dramatic change to move the needle. Document the null result and use it to inform future tests.

How do I know which metrics to prioritize in my A/B tests?

Focus on metrics that directly impact your business goals, typically player lifetime value, retention rates, or net revenue. Avoid getting distracted by vanity metrics like page views or email opens unless they clearly correlate with revenue. Your North Star metric should answer the question: "If this number goes up, does our business actually improve?"

Your Next Move

A/B testing in iGaming is all about systematically learning what motivates your players, what reduces friction, and what actually drives long-term value.

Follow these seven rules and you'll stop making decisions based on hunches. You'll know – really know – what works for your players. And in an industry where the difference between a retained player and a churned player can be a single frustrating experience, that knowledge is everything.

Did you find this article helpful? If so, consider sharing it with other industry professionals such as yourself.
